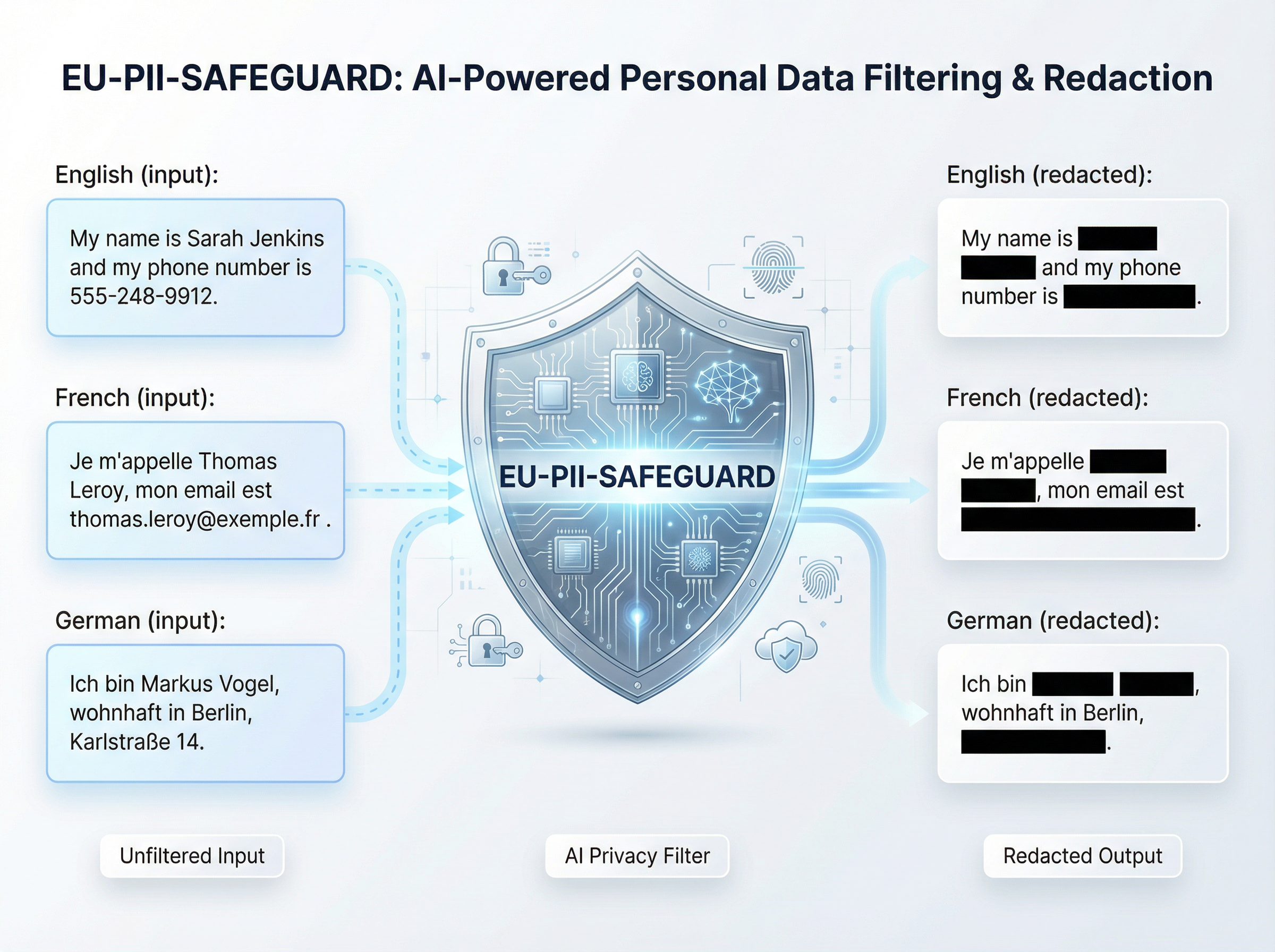

Detecting Personal Data Across All EU Languages

Organizations operating in Europe handle personal data in dozens of languages. GDPR requires knowing where that data lives, but personal data comes in many forms: names, addresses, phone numbers, tax IDs, health records, financial details. Each language has its own formats and conventions.

German addresses look different from French ones. Polish names follow different patterns than Spanish names. A national ID number in Estonia has nothing in common with one from Portugal.

Most PII detection tools were built for English. They struggle with other languages, and they struggle even more when documents mix languages (which happens constantly in multinational organizations).

We built EU PII Safeguard to solve this problem.

What is EU PII Safeguard?

EU PII Safeguard is a machine learning model that reads text and identifies personal information. It supports all 24 official EU languages:

Bulgarian, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, German, Greek, Hungarian, Irish, Italian, Latvian, Lithuanian, Maltese, Polish, Portuguese, Romanian, Slovak, Slovenian, Spanish, Swedish

The model also covers Russian and Ukrainian.

The model detects 42 different types of personal data:

- Personal identifiers: names, age, gender, ethnicity

- Contact information: email, phone, address, city, postal code

- Financial data: credit cards, IBANs, account numbers, salary

- Identity documents: national IDs, passports, driver licenses, tax IDs

- Health information: medical conditions, health insurance IDs

- Digital identifiers: IP addresses, MAC addresses, URLs, usernames

When you run text through the model, it highlights exactly which words or phrases contain personal data and what type they are.

Why This Matters

Consider what happens when you send text to a third-party API, whether that is ChatGPT, an analytics service, or any external tool. If that text contains personal data, you have just transferred it outside your environment. Under GDPR, this creates compliance obligations around data minimization and international transfers.

The safer approach: detect and redact PII before the data leaves.

With EU PII Safeguard, you can run the model on-premise or client-side. The original text with personal data never leaves your environment. Only the anonymized version gets sent to external services.

This is not just about avoiding fines. It is about:

- Lower breach risk: If anonymized data leaks, there is no personal information to expose

- Consistent quality: The same accuracy across all 24 EU languages, not just English

- Operational GDPR: Legal, compliance, and data teams can point to a concrete technical control

Other common use cases

Data pipelines: Anonymize before analytics, ML training, or sending to vendors

Audit and discovery: Scan documents, databases, and logs to find where personal data lives

Real-time protection: Catch PII in support tickets, chat logs, or user-generated content before it gets stored

How It Works

The model is built on XLM-RoBERTa, a multilingual transformer architecture like ChatGPT or Claude that was trained on text from 100 languages. We trained (fine-tuned) it specifically for PII detection across European languages.

The technical approach is called token classification. The model reads text word by word (or more precisely, token by token) and assigns a label to each one: either “not personal data” or one of the 42 PII categories.

This means you get precise, word-level detection. Not just “this document contains a phone number somewhere” but “the phone number is these specific characters in this specific location.”

Trained on Hyper-Realistic Synthetic Data

One unusual aspect of EU PII Safeguard: we trained it primarily on synthetic data.

This might sound counterintuitive. Why not use real data? The answer is that real-world text is messy. It contains inconsistencies, labeling errors, ambiguous cases, and uneven coverage across languages and PII types. If you train on noisy data, your model learns the noise.

However, we make hyperrealistic synthetic data that solves these problems. We generate training examples using advance state-of-the-art techniques where we control exactly what PII appears, where it appears, and how it is labeled. Every example is correct by construction. There are no annotation mistakes, no ambiguous edge cases, no gaps in coverage.

The result is what we call hyper-realistic synthetic data: training examples that look and feel like real text, but with perfect labels and balanced representation across all supported languages and 42 PII types. This approach produces a cleaner signal for the model to learn from.

In practice, this means better generalization aka better performance. The model learns the underlying patterns of what personal data looks like, rather than memorizing quirks of a particular real-world dataset.

Performance

We evaluated the model across all supported languages. The results:

| Language | F1 Score | Language | F1 Score |

|---|---|---|---|

| Irish | 97.98% | Dutch | 97.24% |

| Bulgarian | 97.80% | Slovak | 97.21% |

| Italian | 97.68% | Swedish | 97.09% |

| Portuguese | 97.61% | Russian | 97.04% |

| Slovenian | 97.51% | Croatian | 96.93% |

| Czech | 97.51% | Polish | 96.63% |

| Hungarian | 97.50% | French | 96.59% |

| Estonian | 97.41% | Romanian | 96.54% |

| Latvian | 97.40% | Danish | 96.36% |

| English | 97.36% | German | 96.22% |

| Spanish | 97.34% | Ukrainian | 96.09% |

| Finnish | 97.30% | Maltese | 95.78% |

| Lithuanian | 97.24% | Greek | 95.42% |

The global F1 score is 97.02%. All languages score above 95%.

For context, F1 score measures both precision (did we correctly identify PII?) and recall (did we find all the PII?). A score above 95% means the model catches nearly everything while making very few false positives.

Quick Start

The model is available on Hugging Face: huggingface.co/tabularisai/eu-pii-safeguard

Basic usage:

from transformers import AutoTokenizer, AutoModelForTokenClassification

import torch

model_name = "tabularisai/eu-pii-safeguard"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForTokenClassification.from_pretrained(model_name)

text = "Hello, I am Marie Dubois from Paris. Email: [email protected]"

inputs = tokenizer(text, return_tensors="pt", truncation=True)

with torch.no_grad():

outputs = model(**inputs)

predictions = torch.argmax(outputs.logits, dim=-1)

# Print detected entities

tokens = tokenizer.convert_ids_to_tokens(inputs["input_ids"][0])

labels = [model.config.id2label[p.item()] for p in predictions[0]]

for token, label in zip(tokens, labels):

if label != "O":

print(f"{label}: {token}")Result:

Getting Started

- Visit the Hugging Face model page

- Accept the terms to get access

- Try it on your own text data

For enterprise integrations, custom deployments, or questions about licensing, contact us at [email protected].

Community

We are actively collecting feedback:

- Report edge cases or errors you find

- Share your use cases and results

- Suggest improvements or additional PII types

The model improves with real-world feedback. If you find something it misses, that information helps us make the next version better.

Join our Discord to share feedback, ask questions, or discuss your use case:

EU PII Safeguard is developed by Tabularis AI. We build privacy-preserving AI solutions for enterprise data protection.